Project Abstract

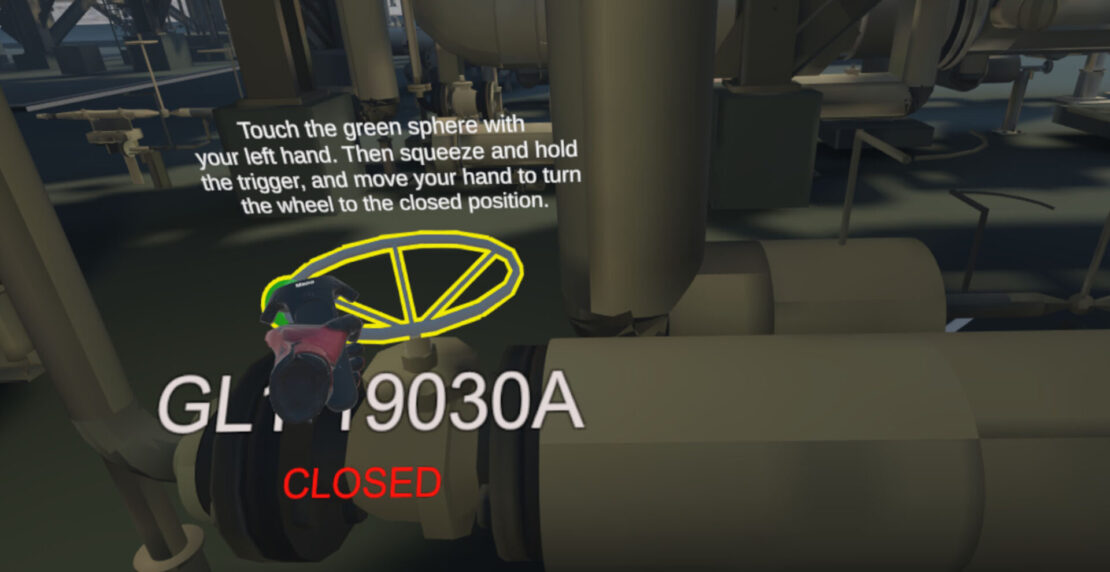

Our client, Cameron LNG, and their partner Mitsui & Co., Inc. came to us with the challenge of creating a VR application that lets their operators perform procedural tasks, such as opening and closing valves, within their liquefaction facility. The facility's 3D model was created in Navisworks. Navisworks files contain an index of every 3D object and store object-specific data used for engineering design and construction.

While more compact than native CAD files, Navisworks files are still too large to import directly into a real-time rendering environment. The facility file contained tens of thousands of objects, each carrying unique data that had to remain intact and be accessible on demand in the Virtual Reality experience. Loaded as-is, the file was far too complex to run smoothly in VR, where frame rates must stay consistently high for the experience to remain comfortable.

Additionally, collision and lighting data had to be calculated dynamically, not only for the 3D objects but also for the environment and the user, so that visual fidelity holds up as users navigate the entire facility in virtual space. Calculating high-resolution lightmaps at this scale brings its own challenges in memory, computation time, and overall visual quality. A virtual reality experience is only as immersive as its surroundings, and lighting quality is crucial.

Solution

Efficiently reduced asset complexity with a hybrid system that combines manually optimized geometry with procedurally optimized geometry that is calculated, created, and placed when the simulation begins.
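The write-up does not say which procedural optimization runs at startup. One common option for plant models full of repeated parts (valves, flanges, pipe segments) is instancing: objects with identical local geometry are collapsed into one shared mesh plus a list of placements. The sketch below assumes that technique; `SceneObject`, `InstanceBatch`, `build_instance_batches`, the object IDs, and the idea that non-repeating objects are the ones left for manual optimization are all hypothetical. Object IDs are kept per instance so each part can still be linked back to its engineering data.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]

@dataclass
class SceneObject:
    object_id: str                # hypothetical key back to the object's data
    vertices: Tuple[Vertex, ...]  # local-space geometry
    position: Vertex              # simplified world placement

@dataclass
class InstanceBatch:
    vertices: Tuple[Vertex, ...]  # one shared mesh for every member
    positions: List[Vertex] = field(default_factory=list)
    object_ids: List[str] = field(default_factory=list)

def mesh_key(vertices, precision=4):
    """Round coordinates so tiny export differences still compare equal."""
    return tuple(tuple(round(c, precision) for c in v) for v in vertices)

def build_instance_batches(objects, min_instances=2):
    """Group objects with identical geometry into instance batches."""
    groups: Dict[tuple, List[SceneObject]] = defaultdict(list)
    for obj in objects:
        groups[mesh_key(obj.vertices)].append(obj)
    batches, singles = [], []
    for members in groups.values():
        if len(members) >= min_instances:
            batch = InstanceBatch(vertices=members[0].vertices)
            for m in members:
                batch.positions.append(m.position)
                batch.object_ids.append(m.object_id)
            batches.append(batch)
        else:
            singles.extend(members)  # assumption: left for manual optimization
    return batches, singles

tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
objects = [
    SceneObject("V-101", tri, (0.0, 0.0, 0.0)),
    SceneObject("V-102", tri, (5.0, 0.0, 0.0)),
    SceneObject("P-7", ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)), (2.0, 0.0, 0.0)),
]
batches, singles = build_instance_batches(objects)
print(len(batches), batches[0].object_ids, [s.object_id for s in singles])
# prints: 1 ['V-101', 'V-102'] ['P-7']
```

In an engine, each batch would map to a single GPU-instanced draw rather than one draw per object, which is where the savings come from.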

Implemented a batched lighting system that automatically recalculates lighting for any area that has changed but is not currently being edited. To reduce the memory footprint and save time, large areas with unbroken shadows were excluded from the lighting computation, and their shadow strengths were set manually.
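The selection rule described above (re-bake an area only if it changed and nobody is editing it, and skip unbroken-shadow areas in favor of a manual shadow strength) can be sketched as follows. This is an illustrative sketch, not the project's implementation: `LightingArea`, the batch size of 4, the default strength of 0.8, the area names, and the `bake_fn` callback standing in for the engine's lightmap bake are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class LightingArea:
    name: str
    dirty: bool = False            # geometry changed since the last bake
    being_edited: bool = False     # an editor is currently working in this area
    unbroken_shadow: bool = False  # large, uniformly shadowed region excluded from baking
    manual_shadow_strength: Optional[float] = None

def apply_manual_shadows(areas: List[LightingArea], default_strength: float = 0.8) -> None:
    """Give excluded areas a fixed shadow strength instead of a baked one."""
    for area in areas:
        if area.unbroken_shadow and area.manual_shadow_strength is None:
            area.manual_shadow_strength = default_strength

def select_bake_batch(areas: List[LightingArea], max_batch: int = 4) -> List[LightingArea]:
    """Pick up to max_batch areas that changed and are not being edited."""
    batch = []
    for area in areas:
        if area.unbroken_shadow:
            continue  # never baked; uses its manual shadow strength
        if area.dirty and not area.being_edited:
            batch.append(area)
            if len(batch) == max_batch:
                break
    return batch

def run_bake_pass(areas, bake_fn: Callable[[LightingArea], None], max_batch: int = 4) -> List[str]:
    """Bake one batch and clear the dirty flag on each baked area."""
    batch = select_bake_batch(areas, max_batch)
    for area in batch:
        bake_fn(area)
        area.dirty = False
    return [area.name for area in batch]

areas = [
    LightingArea("pipe_rack_A", dirty=True),
    LightingArea("tank_farm", dirty=True, being_edited=True),
    LightingArea("under_deck", dirty=True, unbroken_shadow=True),
    LightingArea("compressor_bay"),
]
apply_manual_shadows(areas)
baked = []
result = run_bake_pass(areas, baked.append)
print(result)  # ['pipe_rack_A']
```

`tank_farm` stays dirty and will be picked up by a later pass once editing stops, which is what lets the bake run in the background without fighting active edits.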

Created a framework of data triggers that lets users reference any inspected object's data in real time, without ever loading the object data for the whole scene.
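The on-demand pattern behind these data triggers can be illustrated with a small lazy-loading registry: data for an object is fetched only when that object is inspected, and a bounded least-recently-used cache keeps memory flat. This is a sketch under assumptions. `ObjectDataRegistry`, `on_inspect`, the cache size, and the dictionary-backed `load_from_store` (standing in for wherever the exported Navisworks properties actually live) are hypothetical; in an engine, `on_inspect` would be called from whatever trigger or raycast detects the inspection.

```python
from collections import OrderedDict
from typing import Callable, List

class ObjectDataRegistry:
    """Loads per-object data only when that object is inspected."""

    def __init__(self, loader: Callable[[str], dict], max_cached: int = 256):
        self._loader = loader
        self._cache: "OrderedDict[str, dict]" = OrderedDict()
        self._max_cached = max_cached

    def on_inspect(self, object_id: str) -> dict:
        """Called by an inspection trigger; touches only this object's data."""
        if object_id in self._cache:
            self._cache.move_to_end(object_id)  # mark as recently used
            return self._cache[object_id]
        data = self._loader(object_id)
        self._cache[object_id] = data
        if len(self._cache) > self._max_cached:
            self._cache.popitem(last=False)  # evict least recently inspected
        return data

    @property
    def cached_ids(self) -> List[str]:
        return list(self._cache)

# Hypothetical property store keyed by object ID.
store = {
    "V-101": {"type": "gate valve"},
    "V-102": {"type": "globe valve"},
    "P-7": {"type": "pipe segment"},
}
loads = []

def load_from_store(object_id: str) -> dict:
    loads.append(object_id)  # record each real load for illustration
    return store[object_id]

registry = ObjectDataRegistry(load_from_store, max_cached=2)
for oid in ["V-101", "V-101", "V-102", "P-7"]:
    registry.on_inspect(oid)
print(loads)                # ['V-101', 'V-102', 'P-7']
print(registry.cached_ids)  # ['V-102', 'P-7']
```

The second inspection of `V-101` is served from the cache, and inspecting a third object evicts the least recently used entry, so only a handful of objects' data is ever resident.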

Integrated procedure-based activities that simulate real-world actions at real-world scale.

Tracked activity progress, completion status, and success/failure conditions.
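One way to model a valve procedure with progress, completion, and failure tracking is an ordered list of expected steps checked against each user action. The sketch below is illustrative only: `Step`, `ProcedureTracker`, the valve IDs, and the failure rule (any wrong valve or out-of-order action fails the procedure) are assumptions, since the source does not define the actual success/failure conditions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple

class Status(Enum):
    IN_PROGRESS = "in progress"
    COMPLETED = "completed"
    FAILED = "failed"

@dataclass
class Step:
    valve_id: str
    target_state: str  # "open" or "closed"

@dataclass
class ProcedureTracker:
    steps: List[Step]
    index: int = 0  # number of steps completed so far
    status: Status = Status.IN_PROGRESS
    log: List[Tuple[str, str]] = field(default_factory=list)

    @property
    def progress(self) -> float:
        """Fraction of steps completed, from 0.0 to 1.0."""
        return self.index / len(self.steps)

    def record_action(self, valve_id: str, new_state: str) -> Status:
        """Check one user action against the next expected step."""
        if self.status is not Status.IN_PROGRESS:
            return self.status  # finished procedures ignore further input
        self.log.append((valve_id, new_state))
        expected = self.steps[self.index]
        if (valve_id, new_state) != (expected.valve_id, expected.target_state):
            self.status = Status.FAILED  # assumption: wrong valve or order fails
            return self.status
        self.index += 1
        if self.index == len(self.steps):
            self.status = Status.COMPLETED
        return self.status

steps = [Step("V-101", "closed"), Step("V-102", "open")]
tracker = ProcedureTracker(steps)
print(tracker.record_action("V-101", "closed"), tracker.progress)  # Status.IN_PROGRESS 0.5
print(tracker.record_action("V-102", "open"), tracker.progress)    # Status.COMPLETED 1.0

wrong_order = ProcedureTracker(steps)
print(wrong_order.record_action("V-102", "open"))  # Status.FAILED
```

Keeping the action log alongside the status makes it possible to show an operator exactly where a failed run went wrong.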